Lambda Building Blocks

The AWS Lambda service consists of a few parts that work together to give us serverless computing. In this article, I want to break down those components and give you a quick brief on each.

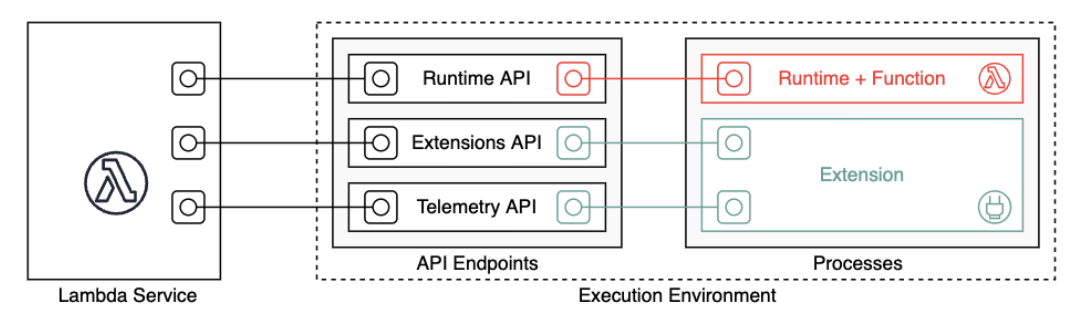

Lambda Service

I’m using "Lambda service" here to denote the control plane of the AWS service. This is the part responsible for configuring the Lambda function (memory limit, environment variables, etc.), and it’s the part that receives the function's code and spins up instances to run it on demand.
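As a rough illustration of the settings the control plane owns, here is a minimal Python sketch that changes a function's memory limit and environment variables through boto3's `update_function_configuration` call. The helper name `configure_function` is hypothetical, and the client is passed in (rather than created inside) so the sketch can be exercised without AWS credentials.

```python
from typing import Any, Dict


def configure_function(client: Any, name: str, memory_mb: int,
                       env: Dict[str, str]) -> Dict[str, Any]:
    """Hypothetical helper: ask the Lambda control plane to update a function's configuration.

    `client` is expected to be a boto3 Lambda client (boto3.client("lambda")),
    or any object exposing the same update_function_configuration method.
    """
    # Lambda accepts memory sizes from 128 MB up to 10,240 MB.
    if not 128 <= memory_mb <= 10240:
        raise ValueError("memory_mb must be between 128 and 10240")
    # Both settings are applied by the control plane, not by your handler code.
    return client.update_function_configuration(
        FunctionName=name,
        MemorySize=memory_mb,
        Environment={"Variables": env},
    )
```

Usage might look like `configure_function(boto3.client("lambda"), "my-function", 512, {"LOG_LEVEL": "debug"})`, where `"my-function"` and the variable are placeholders. New execution environments pick up the updated configuration.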

Execution Environment

The execution environment is the sandbox the Lambda service uses to run your code in isolation; AWS uses Firecracker, a lightweight microVM technology, for this purpose. Each execution environment has a copy of your code and runs it in response to events received by Lambda. To handle an incoming event, the Lambda service can either reuse a previously running execution environment or provision a new one.
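Environment reuse is visible from inside a function: module-level code runs once when a new execution environment is initialized, while the handler runs for every event. A minimal Python sketch, where the handler body and the `invocations` counter are purely illustrative:

```python
import time

# Module-level code runs once per execution environment (during init).
# Expensive setup such as SDK clients or connections is typically placed here.
INIT_TIME = time.time()
invocations = 0


def handler(event, context):
    """Runs once per event; module-level state survives between events
    only when Lambda reuses this execution environment."""
    global invocations
    invocations += 1
    return {
        "invocation_in_this_environment": invocations,
        "cold_start": invocations == 1,
        "environment_age_s": round(time.time() - INIT_TIME, 3),
    }
```

When Lambda reuses a warm environment, consecutive events see the counter grow; a freshly provisioned environment starts again at 1. Because reuse is never guaranteed, this kind of state is suitable for caches, not for correctness.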

Runtime

One of the goals of Lambda is to let developers focus on writing the event-handling code and the custom business logic around it, while abstracting away the code needed to execute handlers in response to events. The Lambda service achieves this goal through a runtime. Working with the Runtime API, the runtime asks the Lambda service for events to execute and calls the handler code defined by the developer. It also provides the glue for integrating with some AWS services: for example, it receives logs and forwards them to CloudWatch, and it adds tracing through the AWS X-Ray service.

One interesting fact about the runtime is that, even though Lambda follows a push-based model (the Lambda service triggers your function and, if needed, spins up a new execution environment in response to incoming events), the runtime works in a pull-based model. It calls a GET endpoint provided by the Runtime API to pull the next pending event, passes it to the defined handler, and returns the response to the client (the client, in this case, is the user or service calling or triggering the Lambda function).
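The pull loop can be sketched in Python with only the standard library. The endpoint paths (`/2018-06-01/runtime/invocation/next`, `.../invocation/{request-id}/response`, `.../invocation/{request-id}/error`), the `Lambda-Runtime-Aws-Request-Id` header, and the `AWS_LAMBDA_RUNTIME_API` environment variable come from the Lambda Runtime API. The function names `run_once` and `main` are hypothetical, and the sketch passes `None` instead of a real context object and skips init-error reporting, so treat it as an illustration rather than a production custom runtime.

```python
import json
import os
import urllib.request

RUNTIME_API_VERSION = "2018-06-01"


def _url(api, path):
    return f"http://{api}/{RUNTIME_API_VERSION}/runtime/{path}"


def _post_json(url, payload):
    data = json.dumps(payload).encode()
    req = urllib.request.Request(url, data=data, method="POST",
                                 headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req) as resp:
        resp.read()


def run_once(api, handler):
    """Pull one event, run the handler, and send back its result or error."""
    # Long-polling GET: blocks until Lambda has an event for this environment.
    with urllib.request.urlopen(_url(api, "invocation/next")) as resp:
        request_id = resp.headers["Lambda-Runtime-Aws-Request-Id"]
        event = json.loads(resp.read())
    try:
        result = handler(event, None)  # a real runtime also builds a context object
    except Exception as exc:
        _post_json(_url(api, f"invocation/{request_id}/error"),
                   {"errorMessage": str(exc), "errorType": type(exc).__name__})
    else:
        _post_json(_url(api, f"invocation/{request_id}/response"), result)
    return request_id


def main(handler):
    # Lambda sets AWS_LAMBDA_RUNTIME_API (host:port) inside the execution environment.
    api = os.environ["AWS_LAMBDA_RUNTIME_API"]
    while True:
        run_once(api, handler)
```

In a custom runtime, a loop like `main(my_handler)` would typically be started from the `bootstrap` executable. Note that Lambda never calls the handler directly: the runtime asks for work and reports back.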

All of the above components, plus your handler code, are the minimum required to run your Lambda function. There are extra optional components that you can opt into for added features:

Extensions

Lambda extensions are extra code, separate from your handler(s), that you can run to provide additional functionality within your Lambda function. That functionality could be an integration with a monitoring provider (like Datadog or Honeycomb, to name a few) or a cross-cutting concern you want to implement once for all of your handlers. Extensions work similarly to the runtime: they pull invoke events from the Lambda service using the Extensions API and execute extension code when the Next endpoint returns an event. One thing to note here is that extensions can increase the cold start of your Lambda function, because they participate in the init phase.
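Here is a similar standard-library sketch of an external extension's loop, assuming the Extensions API's `register` and `event/next` endpoints under `/2020-01-01/extension/`. The extension registers for `INVOKE` and `SHUTDOWN` events, keeps the `Lambda-Extension-Identifier` returned by registration, and then blocks on the Next endpoint. `run_extension` and the `on_invoke` callback are hypothetical names used for illustration.

```python
import json
import urllib.request

EXTENSIONS_API_VERSION = "2020-01-01"


def register(api, name):
    """Register during the init phase (one reason extensions add to cold starts)."""
    req = urllib.request.Request(
        f"http://{api}/{EXTENSIONS_API_VERSION}/extension/register",
        data=json.dumps({"events": ["INVOKE", "SHUTDOWN"]}).encode(),
        method="POST",
        # Lambda expects the extension's file name in this header.
        headers={"Lambda-Extension-Name": name},
    )
    with urllib.request.urlopen(req) as resp:
        resp.read()
        return resp.headers["Lambda-Extension-Identifier"]


def next_event(api, ext_id):
    """Blocking GET on the Next endpoint; returns the next INVOKE or SHUTDOWN event."""
    req = urllib.request.Request(
        f"http://{api}/{EXTENSIONS_API_VERSION}/extension/event/next",
        headers={"Lambda-Extension-Identifier": ext_id},
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())


def run_extension(api, name, on_invoke):
    ext_id = register(api, name)
    while True:
        event = next_event(api, ext_id)
        if event["eventType"] == "SHUTDOWN":
            return  # the environment is shutting down: flush buffered data and exit
        on_invoke(event)  # hypothetical hook, e.g. forward metrics to a monitoring backend
```

Inside Lambda, `api` would be read from the `AWS_LAMBDA_RUNTIME_API` environment variable, and the extension executable would typically be shipped in a layer under `/opt/extensions`.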

Telemetry API and Telemetry Extensions

Lambda and the execution environment produce a wealth of telemetry, which falls into three categories:

- Platform telemetry – Logs, metrics, and traces which describe events and errors related to the execution environment runtime lifecycle, extension lifecycle, and function invocations.

- Function logs – Custom logs generated by Lambda function code.

- Extension logs – Custom logs generated by Lambda extension code.

Any extension can subscribe to this telemetry by registering a receiver with the Lambda service, and Lambda will then stream the telemetry to an HTTP (or TCP) endpoint local to the execution environment. From there, it is up to the extension to process each telemetry record. An interesting thing to note: while Lambda handlers and regular extensions (through the Runtime and Extensions APIs) use a pull-based model to receive events, telemetry extensions receive telemetry in a push model, where the Lambda service batches telemetry and delivers it to the local endpoint defined through the subscription API.
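A minimal Python sketch of both halves of a telemetry extension: a subscription request sent with `PUT /2022-07-01/telemetry` (carrying the extension ID obtained at registration), and a local HTTP listener that Lambda pushes batches to. The port, the buffering values, and the helper names `subscription_body`, `subscribe`, and `start_listener` are illustrative assumptions; check the Telemetry API reference for the accepted schema versions and buffering ranges.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

TELEMETRY_API_VERSION = "2022-07-01"


def subscription_body(port):
    """Ask Lambda to push platform, function, and extension telemetry to a local listener."""
    return {
        "schemaVersion": "2022-12-13",
        "destination": {"protocol": "HTTP", "URI": f"http://sandbox.localdomain:{port}"},
        "types": ["platform", "function", "extension"],
        # Lambda buffers records and flushes a batch when any of these limits is hit.
        "buffering": {"maxItems": 1000, "maxBytes": 262144, "timeoutMs": 100},
    }


def subscribe(api, ext_id, port):
    req = urllib.request.Request(
        f"http://{api}/{TELEMETRY_API_VERSION}/telemetry",
        data=json.dumps(subscription_body(port)).encode(),
        method="PUT",
        headers={"Lambda-Extension-Identifier": ext_id,
                 "Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        resp.read()


def start_listener(host, port, on_batch):
    """Push side: Lambda POSTs each batch (a JSON array of records) to this server."""
    class TelemetryHandler(BaseHTTPRequestHandler):
        def do_POST(self):
            length = int(self.headers["Content-Length"])
            on_batch(json.loads(self.rfile.read(length)))
            self.send_response(200)
            self.send_header("Content-Length", "0")
            self.end_headers()

        def log_message(self, *args):  # keep the sketch quiet
            pass

    server = HTTPServer((host, port), TelemetryHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```

Note the inversion compared to the pull loops of the runtime and regular extensions: here the extension only opens a server and waits. Inside Lambda, the listener would be started before subscribing and bound to the host used in the destination URI (`sandbox.localdomain`), and each POST carries a JSON array of records (each with `time`, `type`, and `record` fields) batched according to the `buffering` settings.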